Learning the Latent structure in LLMs

Web Development

Mentors :

Darshan Makwana

Mentees :

10

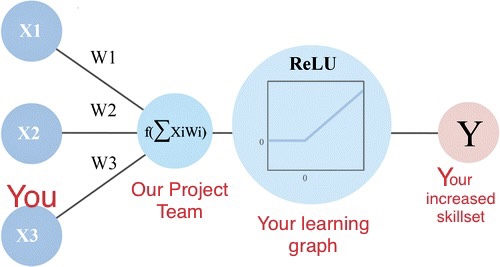

"We will learn what large language models are and how they can be used as knowledge bases. By the end we will build and train a BERT and a miniGPT entirely from scratch. Our miniGPT won't be as powerful as the GPT models out there, but we will learn how to improve it and, at the very end of the project, if time permits :(, some of the techniques that can be used to align such models towards instructions. The aim of the project is to make you so well versed in LLMs that you can build and train one from scratch on the go.

Python is a hard prerequisite for this project, as we will be turning our intuitive thoughts about natural language understanding into logical rules that the computer executes via Python.

Details about the project and the assignment can be found here:

https://github.com/darshanmakwana412/LLM-SOC"

Prerequisites:

Enthusiasm is the most fundamental prerequisite, apart from the basics of probability theory and statistical machine learning. Python is a hard prerequisite.

Tentative Timeline :

| Week | Work |

|---|---|

| Week 1 | Gentle Introduction to NLP with word2vec, word embeddings, Distributional sementics |

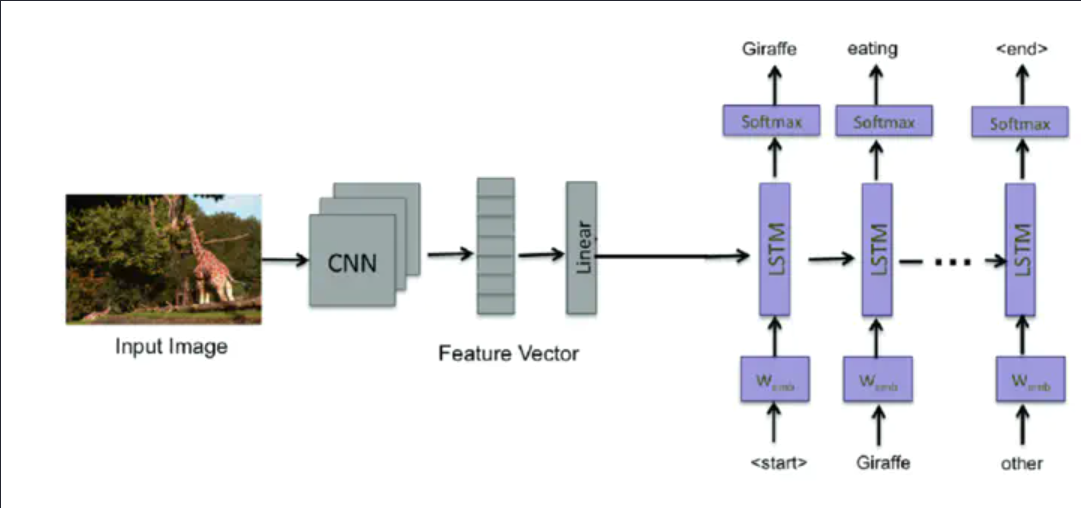

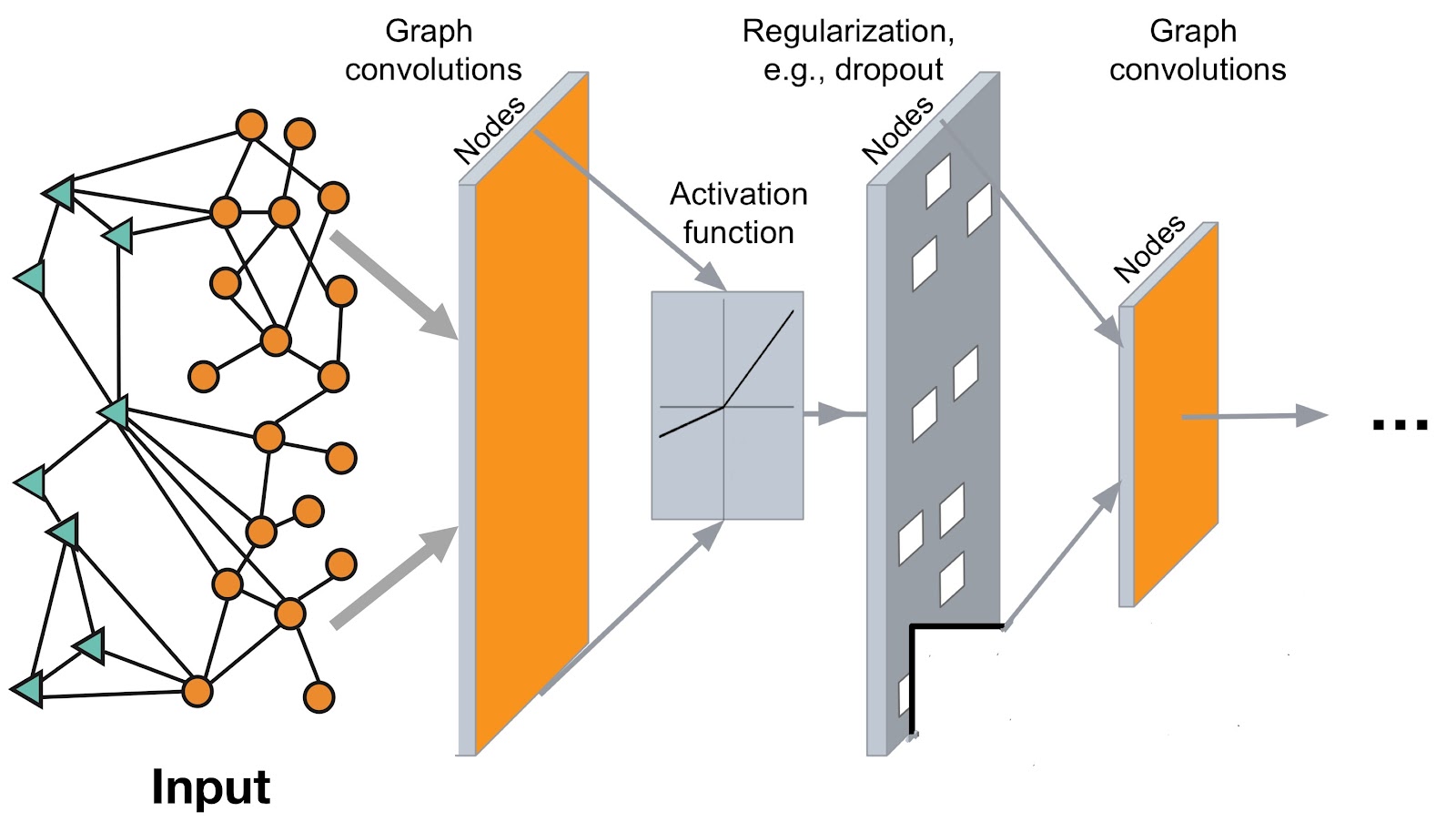

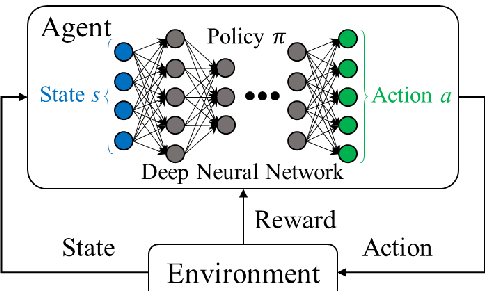

| Week 2,3 | Introduction to pytorch and neural networks, convolutional layers and pooling, building cnns and training them on dummy datasets, Text classification, building generative and discriminative models |

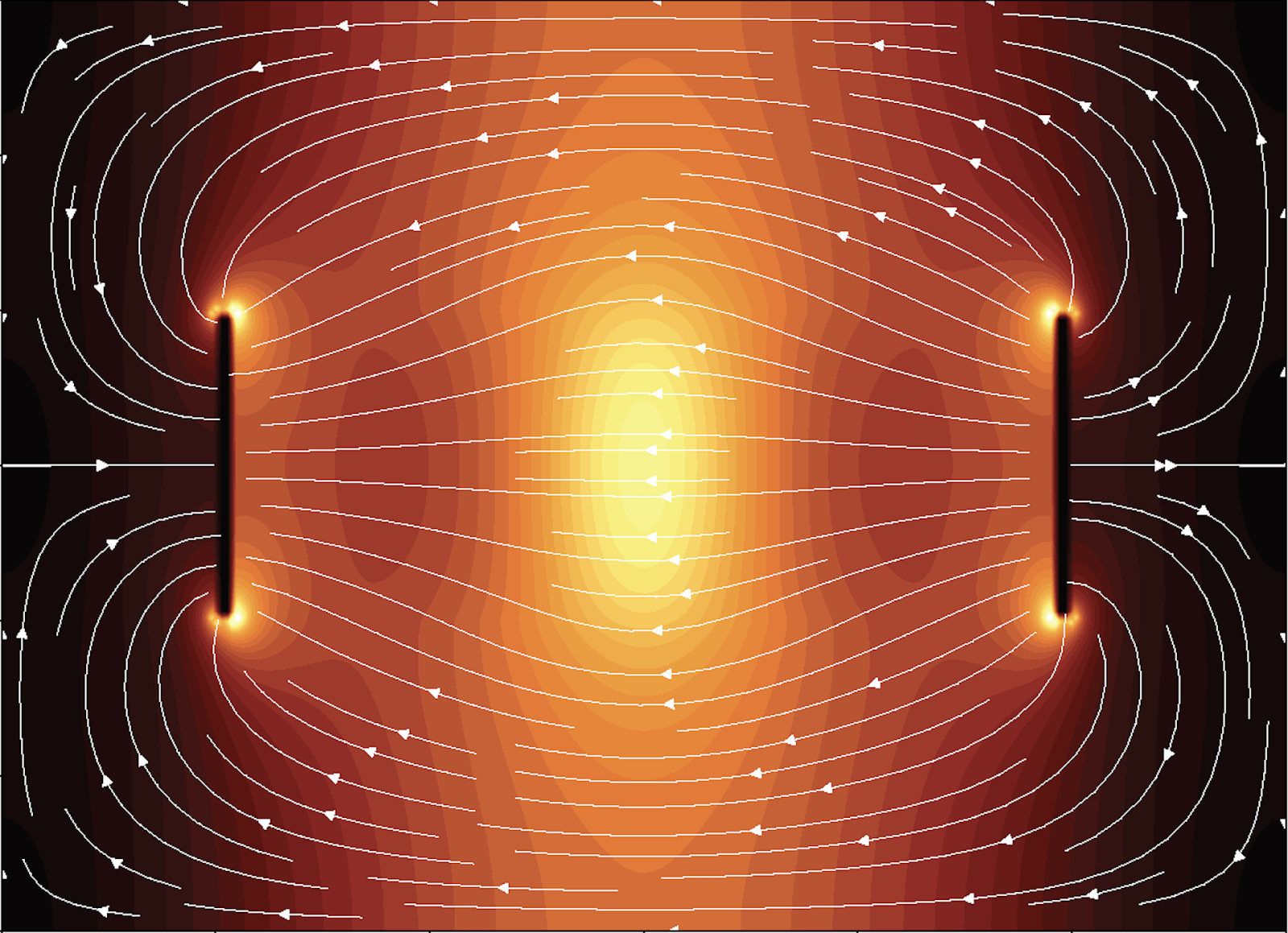

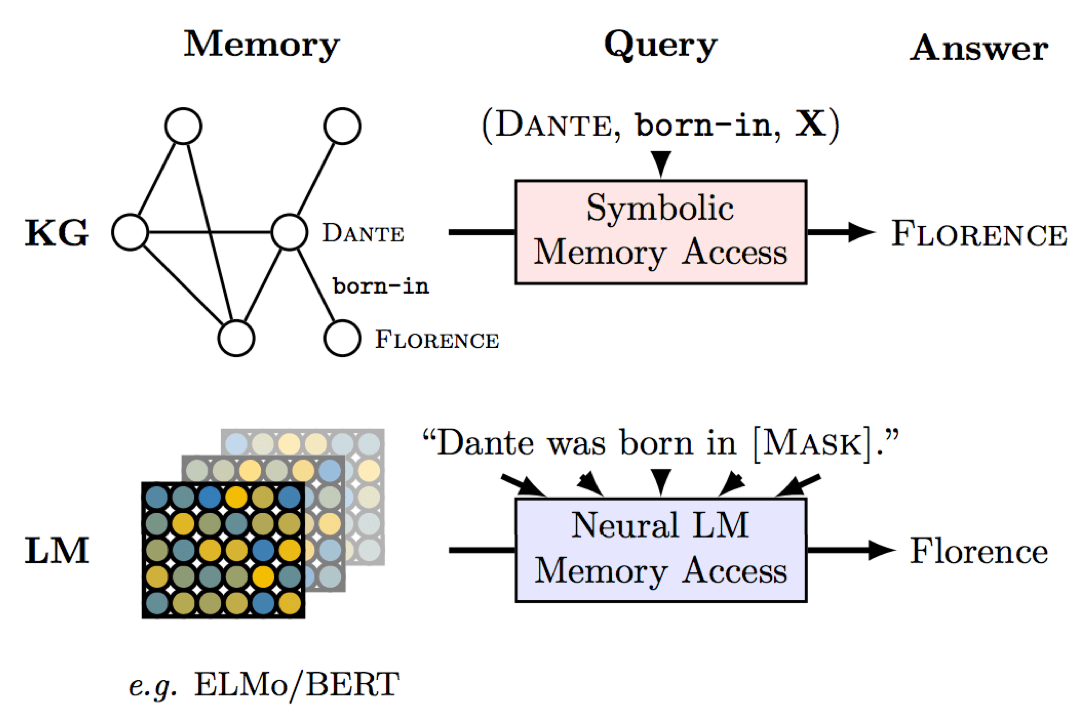

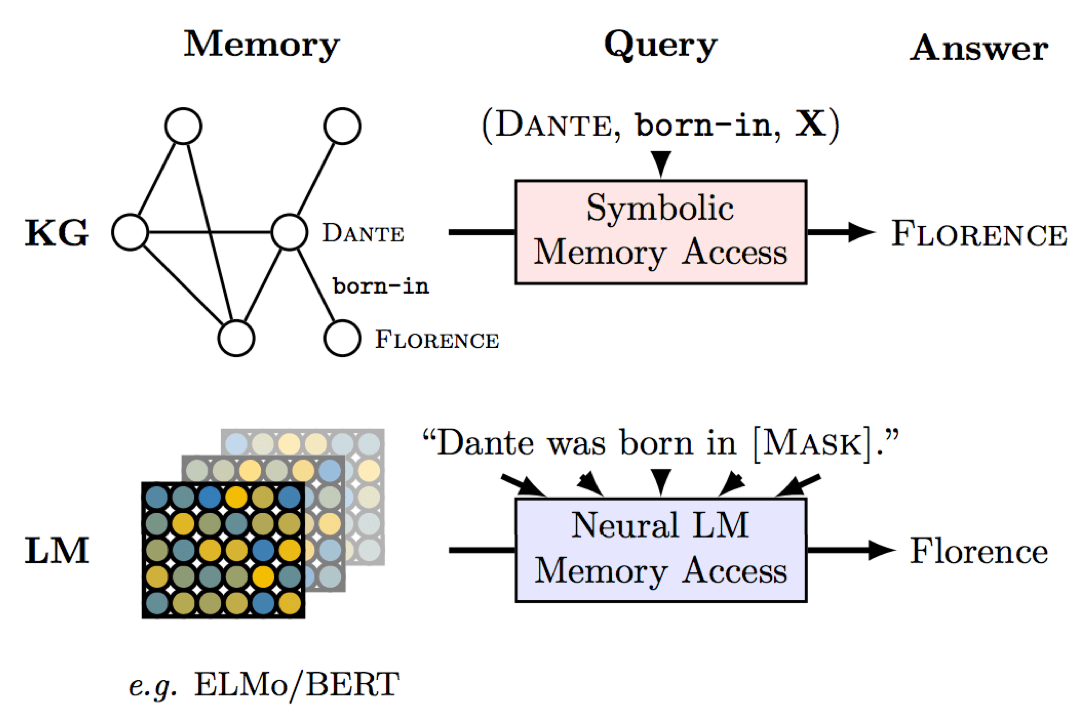

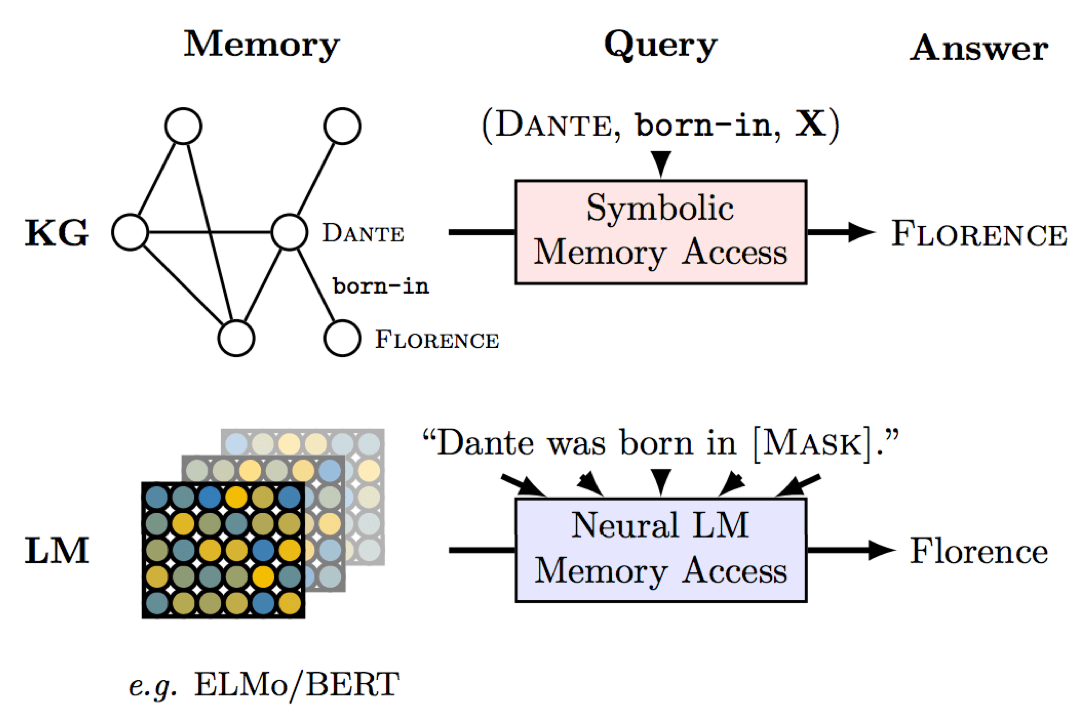

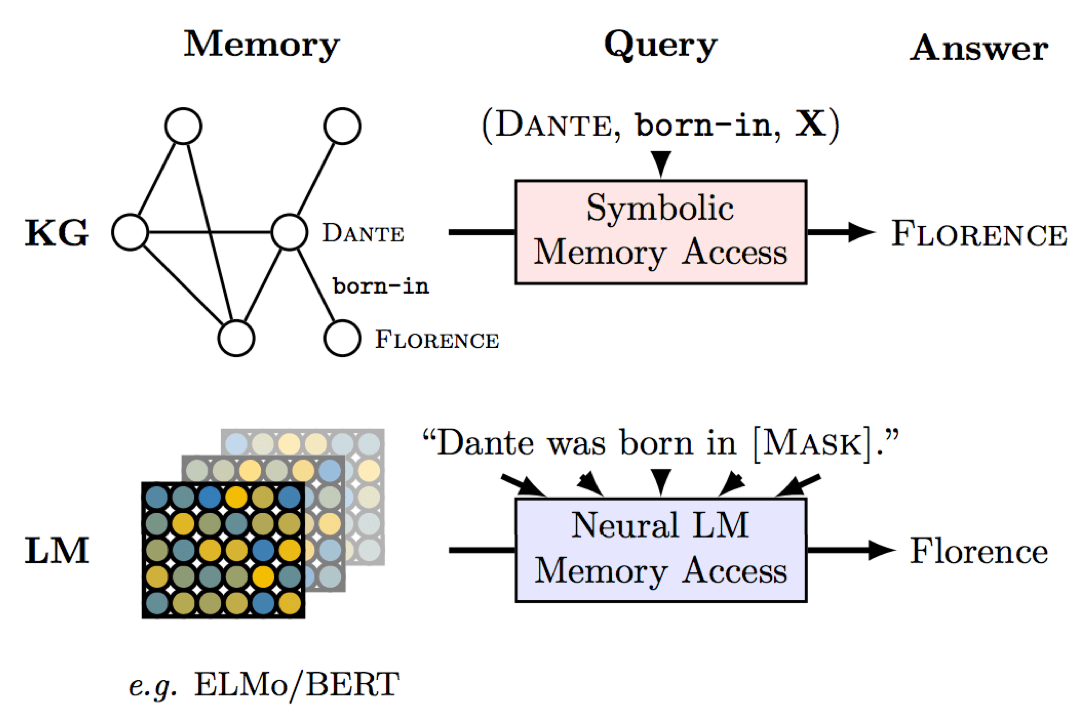

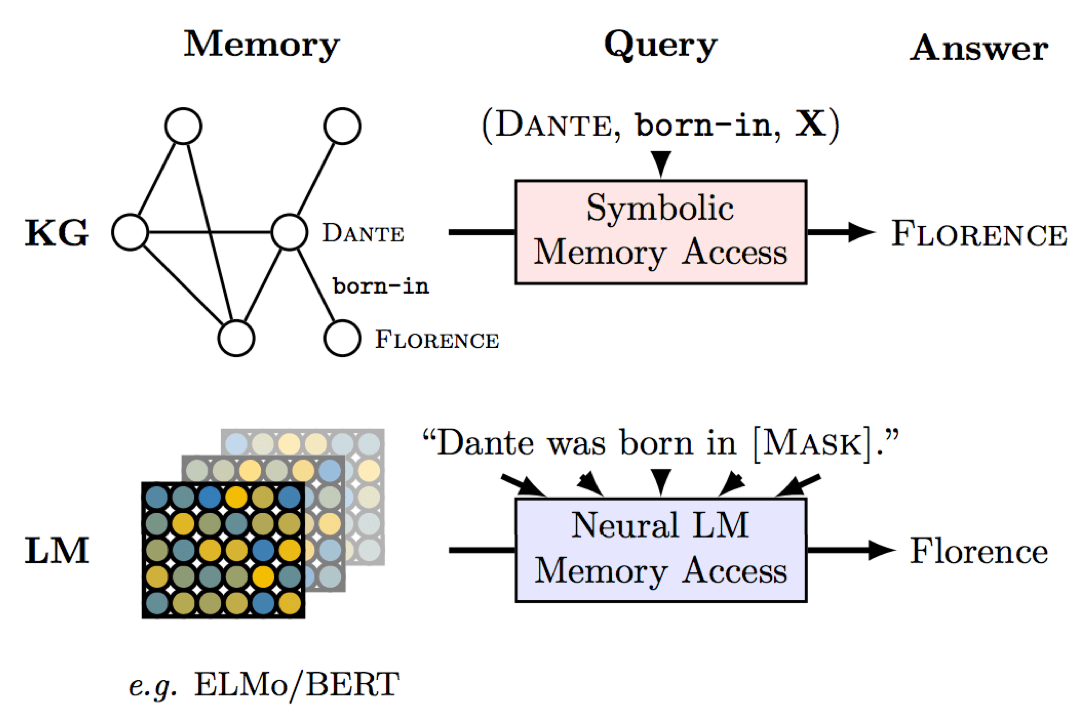

| Week 4,5 | Language Modeling, N gram LMs, Neural LMs, evaluating LMs, Building encoder-decoder models, autoencoder and inferencing |

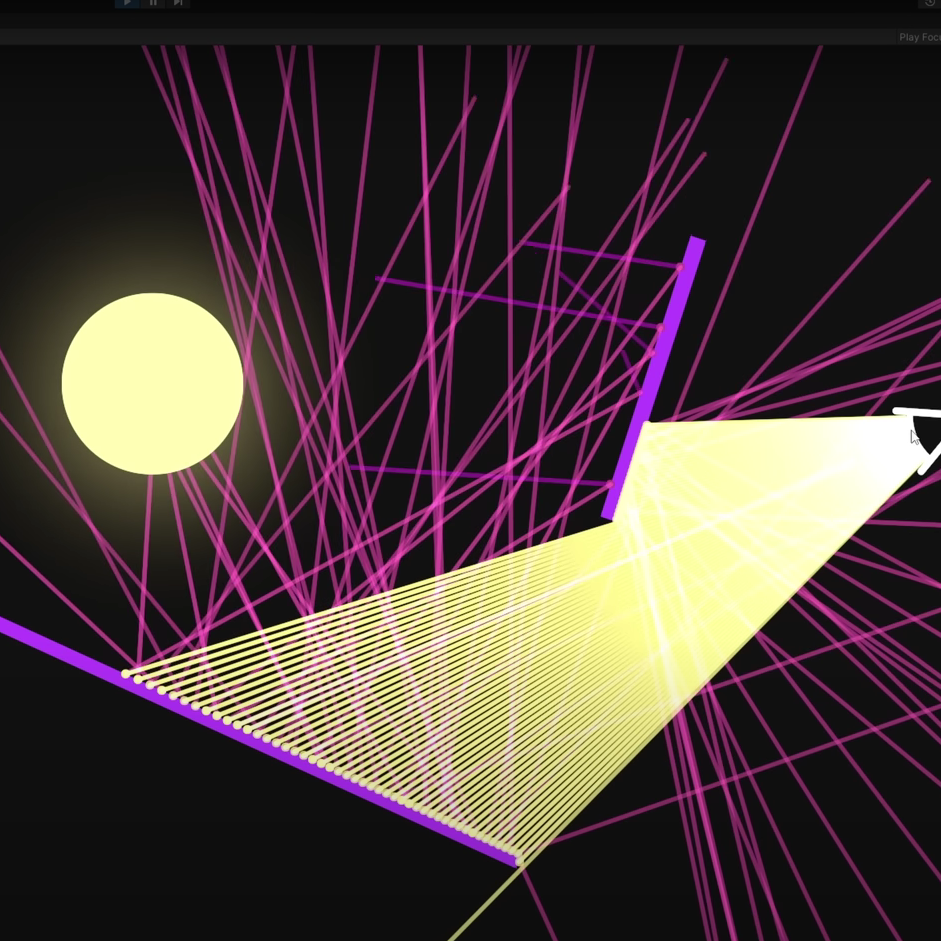

| Week 6,7 | Introducing attention(Transformer: Attention is All You Need) in encoder-decoders, building a transformer from scratch, Seq2Seq, Transfer learning, replacing pre trained word embeddings in GPT and BERT |

| Week 8 | Building and training BERT and miniGPT in pytorch from scratch |
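To give a flavour of the N-gram LMs and LM evaluation listed for Weeks 4 and 5, here is a minimal sketch of a bigram language model with add-one (Laplace) smoothing, scored by perplexity. It is an illustration only and not taken from the project repository: the function names (`train_bigram_lm`, `bigram_prob`, `perplexity`), the whitespace tokenization, the `<s>`/`</s>` boundary markers, and the toy corpus are all assumptions made for this example.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Count contexts and bigrams over whitespace-tokenized sentences."""
    contexts, bigrams, vocab = Counter(), Counter(), set()
    for sentence in sentences:
        # Hypothetical boundary markers so sentence starts and ends are modeled too.
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens)
        contexts.update(tokens[:-1])  # every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams, vocab


def bigram_prob(prev, word, contexts, bigrams, vocab):
    """P(word | prev) with add-one smoothing, so unseen bigrams get non-zero mass."""
    return (bigrams[(prev, word)] + 1) / (contexts[prev] + len(vocab))


def perplexity(sentence, contexts, bigrams, vocab):
    """Exponentiated average negative log-probability per predicted token."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    pairs = list(zip(tokens[:-1], tokens[1:]))
    log_prob = sum(
        math.log(bigram_prob(prev, word, contexts, bigrams, vocab))
        for prev, word in pairs
    )
    return math.exp(-log_prob / len(pairs))


if __name__ == "__main__":
    # Toy corpus, invented for this sketch.
    corpus = ["the cat sat", "the dog sat", "the cat ran"]
    model = train_bigram_lm(corpus)
    print(perplexity("the cat sat", *model))  # word order seen in training
    print(perplexity("sat cat the", *model))  # scrambled word order
```

Lower perplexity means the model finds a sentence less surprising, so the sentence in a familiar word order should score lower than the scrambled one. Neural LMs are evaluated with the same metric, which makes this a convenient baseline to compare against.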