PROJECTS

Sudarshana: QR-code Scanning Security Drone

Team Members: Anjali, Prapti Sao, Reet Mhaske, Bhuvana

Ever seen autonomous fighting drones in sci-fi or spy movies and wished you had one at your disposal? Like you, we were fascinated by them, and ITSP was the perfect platform to materialise this idea, albeit as a preliminary version of those far-from-reality drone bots.

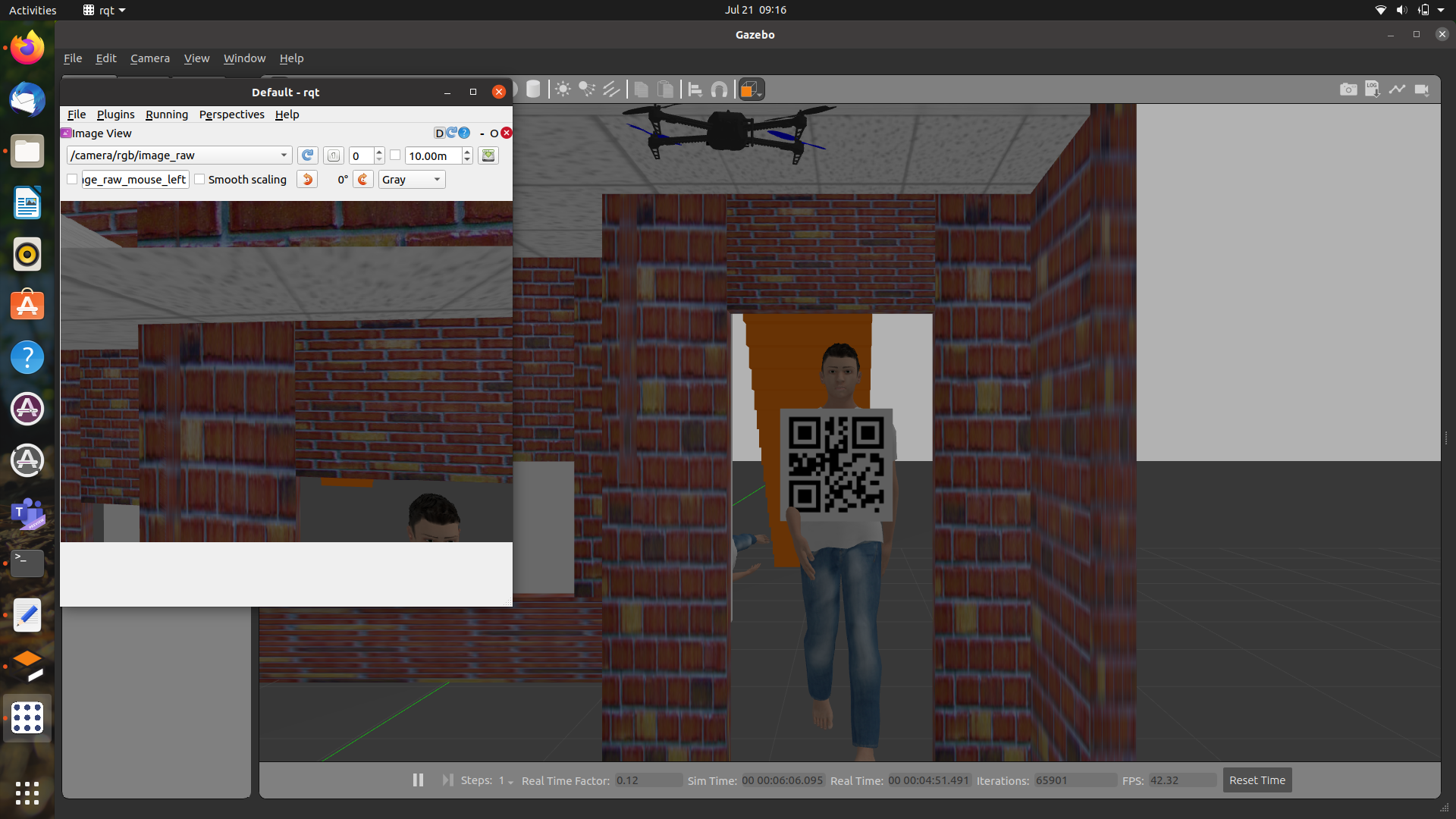

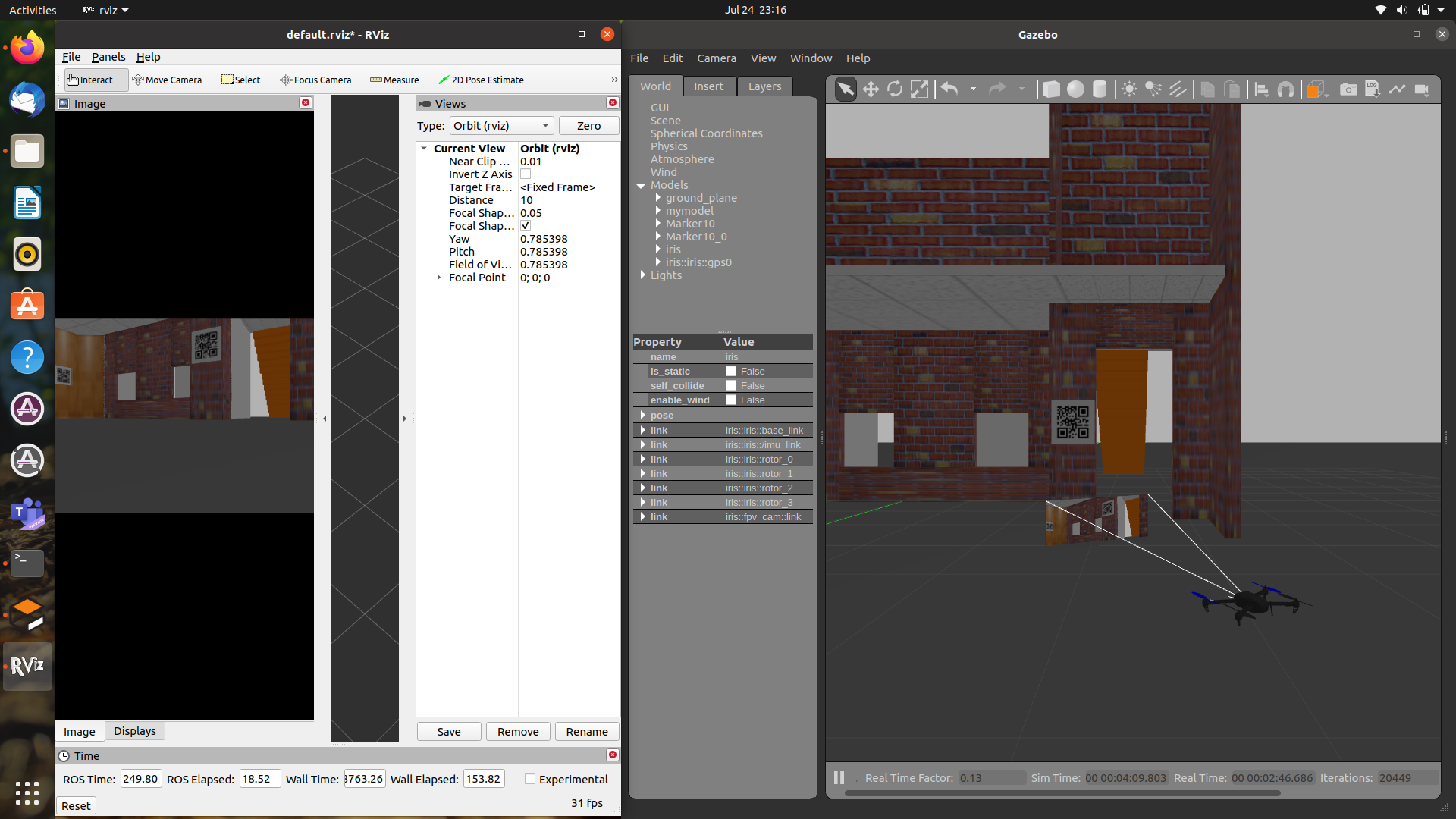

Armed with neither fancy weapons nor laser vision, our drone performs a fairly simple task: it recognizes human threats by scanning the QR code on a person's identity card.

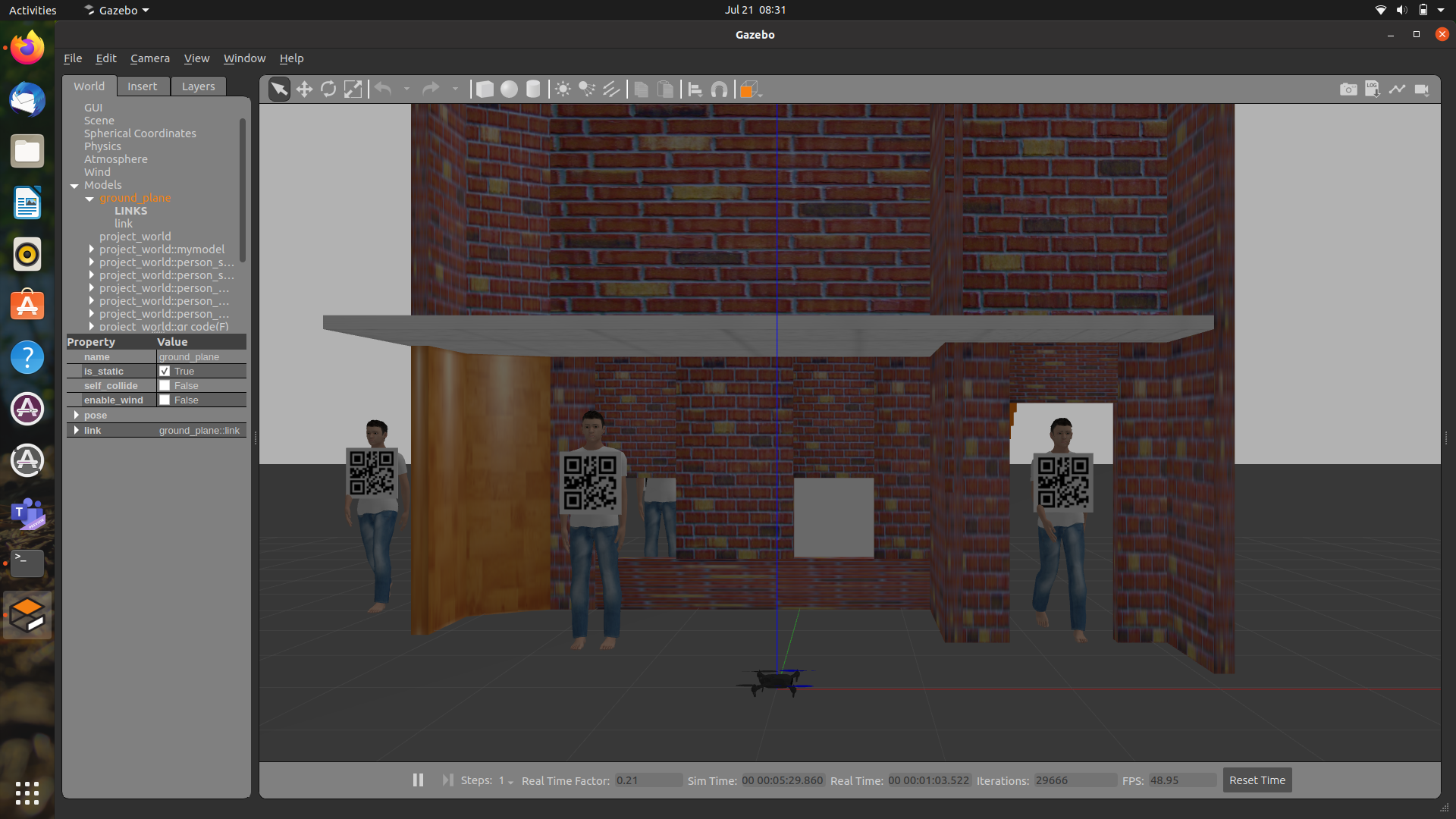

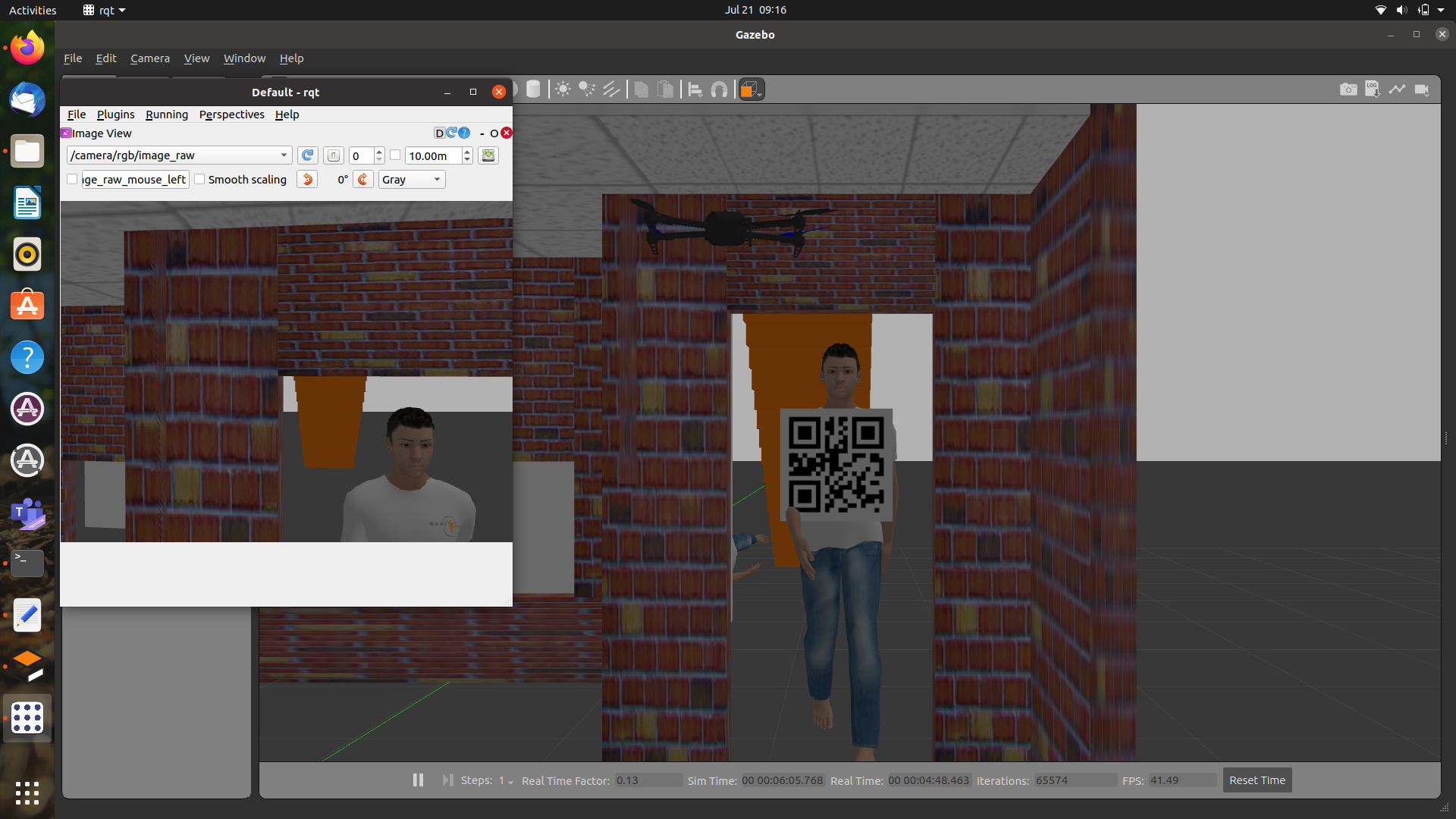

The drone hovers along a predefined path, scans and reads the QR code on a person's identity card, and then reports the coordinates of the point where the person was detected. It uses an RGB depth camera to take a picture of the QR code and Python libraries to decode it and return the value stored in that code.

This preliminary drone is valuable because it can classify people, based on their QR code values, as “Authorized” or “Unauthorized”.

Being autonomous, such avant-garde security drones need little human intervention.

Once improved upon, they could be of immense market value to security companies, as they are faster and more faithful than human security staff, making the drone worthy of its name, Sudarshana, the protecting discus of Lord Krishna.
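The classification step described above can be sketched in a few lines of Python. This is only an illustration, not the team's actual code: the whitelist `AUTHORIZED_IDS`, the `Detection` record, and the `classify` function are hypothetical names, and the QR string is assumed to have already been decoded from the camera image by a QR library.

```python
from dataclasses import dataclass

# Hypothetical whitelist of ID values; the project's real list is not given.
AUTHORIZED_IDS = {"EMP-001", "EMP-002"}


@dataclass
class Detection:
    qr_value: str      # text decoded from the QR code
    position: tuple    # (x, y) of the drone when the code was read
    status: str        # "Authorized" or "Unauthorized"


def classify(qr_value, position, authorized=AUTHORIZED_IDS):
    """Label a decoded QR value and attach the drone position to the report."""
    value = qr_value.strip()  # decoded strings may carry stray whitespace
    status = "Authorized" if value in authorized else "Unauthorized"
    return Detection(value, position, status)


if __name__ == "__main__":
    # Two made-up readings taken at two made-up points on the path.
    readings = [("EMP-001", (2.0, 3.5)), ("XYZ-999", (4.0, 1.0))]
    for value, position in readings:
        d = classify(value, position)
        print(d.status, d.qr_value, "at", d.position)
```

A set lookup keeps the check simple; a real deployment would more likely query a database of valid identity cards.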

Inspiration for the idea: In an incident in Shanghai, a drone was used for advertising. It formed a QR code in the sky that people could scan, which directed them to a gaming site being promoted. Because this was new and impressive, it attracted good engagement and publicity. This gave me the idea that QR code scanning could also be put to use in the workplace for security purposes.

Learnings/Key Takeaways/Experience:

Gesture Controlled Drone

Team members: Dibyojeet Bagchi | Prashant Shettigar | Immanuel Williams | Karan Agarwalla

Devices like drones are usually controlled with a remote, but what if we could control them with gestures? Wouldn't it be cool, and much easier, to fly a drone just by moving your hands?

The initial idea was to develop a full-fledged gesture-controlled drone, but because of budget constraints we implemented gestures for only two motions, roll and pitch. Throttle and yaw are sent through the RC receiver.

Initially, we selected this project because controlling a drone with gestures seemed cool, but we later realised that the technology can be extended to many other devices, such as gesture-based switches for electrical appliances. Smartphones could come with hand-gesture control. Motion-based control using an MPU could be used to control many devices in the future, for example switching on lights with just a hand movement.
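As a rough illustration of how roll and pitch gestures might be derived from an MPU, the sketch below converts accelerometer readings into tilt angles and then into RC-style pulse widths. It is an assumption-laden example, not the team's firmware: the function names, the 45 degree tilt limit, and the 1000 to 2000 microsecond command range are all hypothetical, and the readings are assumed to be in units of g while the hand is nearly still.

```python
import math


def tilt_angles(ax, ay, az):
    """Return (roll, pitch) in degrees from accelerometer readings in g."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    return roll, pitch


def angle_to_pwm(angle_deg, max_angle=45.0, centre=1500, span=500):
    """Map a tilt angle to a pulse width in microseconds (assumed range)."""
    # Clamp so that an exaggerated gesture cannot exceed the command range.
    clamped = max(-max_angle, min(max_angle, angle_deg))
    return round(centre + span * clamped / max_angle)


if __name__ == "__main__":
    # Hand tilted sideways by 30 degrees: gravity splits between y and z.
    ax, ay, az = 0.0, math.sin(math.radians(30)), math.cos(math.radians(30))
    roll, pitch = tilt_angles(ax, ay, az)
    print("roll command:", angle_to_pwm(roll))
    print("pitch command:", angle_to_pwm(pitch))
```

Using only the accelerometer is the simplest option; a practical controller would usually fuse it with the gyroscope to reject noise from hand movement.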

Learnings/Key Takeaways/Experience:

Bionicopter

Team members: Abhinav, Rishyanth, Yagneswar, Karthikeya

This project builds a bionicopter, a remote-controlled robotic model of a dragonfly.

Our main inspiration was our interest in drones and aerial vehicles; we wanted to make an unmanned remote-controlled copter that works differently from others. So we used the flapping mechanism of a dragonfly to make the drone fly, and hence named it bionicopter (bionic-copter).

We are going to control the bionicopter over Bluetooth from an app, for which the code has been written and uploaded to a Raspberry Pi. The wing flap frequency can be varied, and a servo motor rotates the flapper orientation, which changes the flapping direction and hence the direction of movement.
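One way to picture the control scheme is a lookup from app commands to a flap frequency and a servo angle. The sketch below is purely illustrative: the command names, the frequencies, and the angles in `COMMANDS` are invented for the example and are not the team's values, and the Bluetooth and motor-driver code is left out.

```python
# Hypothetical table: command -> (flap frequency in Hz, servo angle in degrees).
# A servo angle of 90 is assumed to mean the flappers point straight up.
COMMANDS = {
    "hover": (15.0, 90),
    "forward": (18.0, 60),
    "back": (18.0, 120),
    "land": (0.0, 90),
}


def handle_command(text):
    """Translate a command string from the app into actuator targets."""
    key = text.strip().lower()
    # Fall back to hovering when the command is not recognised.
    return COMMANDS.get(key, COMMANDS["hover"])


if __name__ == "__main__":
    for cmd in ["Forward", "land", "spin"]:
        flap_hz, servo_deg = handle_command(cmd)
        print(cmd, "->", flap_hz, "Hz,", servo_deg, "deg")
```

Keeping the mapping in a table makes it easy to tune the values during flight tests without touching the command-handling logic.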

High on Wheels

Team members: Ekta Agarwal | Arjun Gupta | Naman Saraf

A quadcopter that can fly as well as walk. We have made an X-shaped quadcopter with a spider bot attached to its bottom.

Learnings/Key Takeaways/Experience: